Evolutionary Computing

8 okt. 2013This lecture had 4 topics inspired by nature.

Genetic Algorithms (GA)

We looked at genetic programming in machine learning I last year, and the recap is that we try to use evolution's encoding of design in genes, combined with selection, crossover and mutation to guide our search. In other words, we start off with a "population" of genes representing some values of the properties we want to optimize. The goal is to find the optimal value be it minimum or maximum. The algorithm will test these initial genes for their fitness and select randomly from them a subset that will be able to form the next generation proportional to their fitness (tournament or roulette).

http://rednuht.org/genetic_cars_2/

The act of generating the "offspring" is called crossover and consists of mixing the genes of the lucky winners, which means that the covered search direction will be determined by the current best solutions. It is therefore very important to have as much variation as possible to start with. In addition to crossover, it is also essential to have some random mutation. Basically random changes to the genes. It does not have to be a high probability, as that would lead to degeneration, but some is necessary and will assure that it is possible for the algorithm to consider states that have been lost. One of the main reasons why GA is popular is that it's easy to enable parallel computing.

The algorithm will, if implemented correctly, keep getting better and better results, but is also very slow, and they tend to be context sensitive as the encoding of genes, fitness and crossover functions must be carefully designed.

Genetic programming (GP)

Is the same idea as GA, but extends it to include functions in addition to parameters in the search space. Instead of encoding parameters as a string, trees are used where the end nodes are parameters and the other nodes are functions. These functions can be very simple or very complex. The algorithm will then evolve combinations of these functions that can express even more complex things.

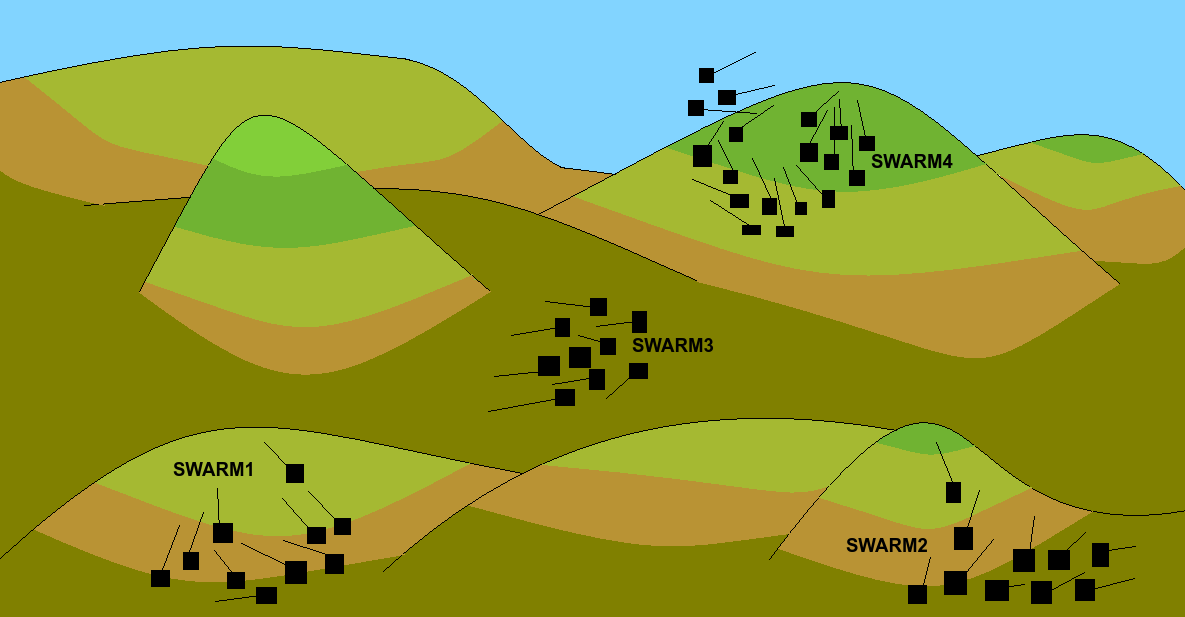

Particle Swarm Optimization (PSO)

This search method is inspired by how flocking occurs, like for example with birds. A "bird" or particle will have a direction, speed and knowledge of the terrain "fitness" directly under itself. They also remember the previously best observed locations and try to fly towards areas with better fitness. They can communicate findings with other close particles, and this in turn will make others follow when something better is found. The latency will result in a broad search. There is also implemented support for collision avoidance between particles and different swarms so to avoid searching the same solution space multiple times at the same time. The particles also want to stay close, but can be programmed to split into new swarms given some criteria. We say the information is encoded in the swarms particles, and the method is often applied to continues search spaces.

What the swarms might look like in 2-D (3rd dimension would be the fitness in at that location)

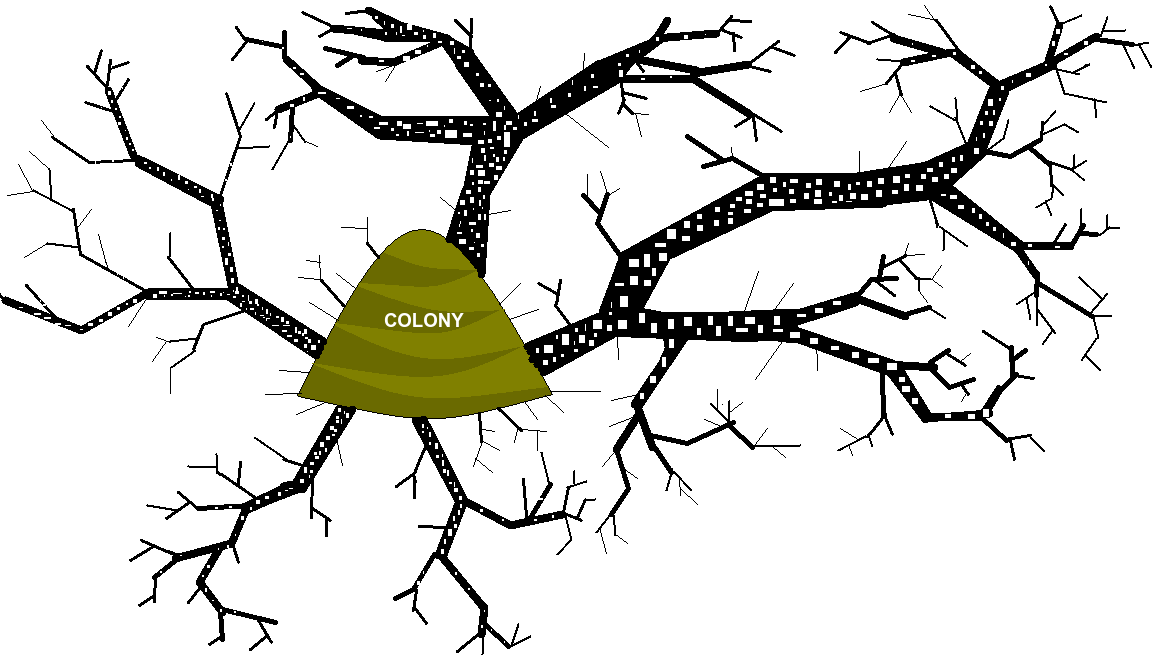

Ant Colony Optimization (ACO)

Is often used for discrete search spaces, and examples of usage are the traveling salesman. The idea is that very simple ants move around at random, but are more likely to travel where pheromones are placed out by previous ant that scored the highest the last round. The level of pheromones decrease over time and there are different solutions to what ants are allowed to lay out pheromones and how much. The ants try to find good paths in graphs, and the memory of the system is "in the world" encoded as pheromones.

Ants go where the pheromone is the strongest, and the knowledge is encoded in the world