Applied InfoSec (part1)

24 Oct. 2012

25th October 2012 - Session 1: Security assumptions in Operating Systems

It's important to understand the history of computing in order to understand the current and coming security aspects of computer usage. One of the main principles of computing is "black box abstraction": hiding complexity in a box and defining its input and output so that it can be used to build bigger black boxes. One thing often forgotten in this process is the set of assumptions made along the way.

In the early days, when computer hardware was really expensive, big companies had huge mainframes serving "dumb" terminals. As time went by and hardware got cheaper (and faster), computing transitioned to "personal computers" doing all the processing locally. More and more devices are being connected, and the current usage trend is smaller devices like smartphones and tablets, while more and more of the storage and some of the processing is moved back to our age's "mainframes" in the cloud. The same has been true for e-mail for quite a while, and more and more services are web-based as a result of more mature technology and the need to keep our data synchronized and highly available. At the same time, small embedded computers in coffee machines and washing machines are getting connected. Even cars are going online these days, and we've already seen successful attacks on locking mechanisms and early attempts at overriding more critical components like brakes, acceleration and fuel systems. We are getting more and more network centric. How secure can we be? What assumptions are being violated when connecting more and more gadgets to the global network?

What is the threat model?

Software is tightly dependent on the hardware supporting it. Software security alone is useless without some physical security: given physical access to a machine, just about anything can be manipulated. In the car example, the assumption was that the network bus would only host trusted entities, so historically no access control was implemented. What happens when we suddenly connect external, potentially unfriendly media to the car's media center, and the car itself to an external network?

Some terms:

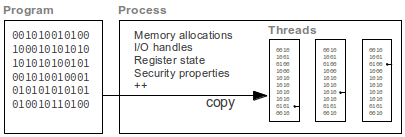

- Program: the actual code for running an application, usually stored on a permanent medium.

- Process: a framework created by the operating system into which an instance of a program is loaded. It has resources like memory and I/O devices associated with it.

- Thread: A process contains at least one thread (of execution), and can split into several threads performing different tasks. We think of it as the program's instructions being run on a processor. The current position of a thread is tracked by its program counter.
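The relationship between a process and its threads can be illustrated with a minimal Python sketch. The function name `run_threads` and the counter are purely illustrative assumptions, not something from the lecture. The point is that all threads run inside the same process (same process ID) and share its memory, which is why access to the shared counter needs a lock.

```python
import os
import threading

def run_threads(n_threads=4, n_increments=1000):
    """Start several threads inside this one process and let them share memory."""
    counter = {"value": 0}   # lives in the process's memory, visible to every thread
    seen_pids = set()        # process IDs observed from inside the threads
    lock = threading.Lock()  # shared memory needs coordination between threads

    def worker():
        for _ in range(n_increments):
            with lock:
                counter["value"] += 1
        with lock:
            seen_pids.add(os.getpid())

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter["value"], seen_pids

if __name__ == "__main__":
    total, pids = run_threads()
    print("counter:", total, "process ids seen:", pids)
```

Every thread reports the same process ID, since a thread is a path of execution inside a process rather than a separate container of resources.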

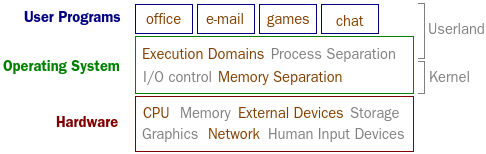

So, what is an operating system (OS)? Basically it's an insulating layer between user programs and the actual hardware, allowing for sharing of resources like processing power, memory, and I/O (input/output) such as permanent storage/file system, network access, mouse and keyboard. In addition, it often includes a graphical user interface that sets the "framework" for the user applications running on top. One reason for this layering is simply to avoid each user program needing specific knowledge about every kind of sound card, network card and graphics card. Instead these common tasks are given to the OS, and in turn the OS gives user programs defined interfaces for playing sound and drawing objects on the screen. Another aspect has to do with how resources are shared, namely how to keep

- competing programs from accidentally interfering with each other and

- competing programs from maliciously causing harm to each other

Security is often divided into three main categories: confidentiality, integrity and availability. Due to the traditional focus on confidentiality, a "fail-stop" approach is not unusual: if something bad happens, just terminate. This approach has implications, for example in real-time systems where it's essential that high-priority tasks continue. Even in systems where confidentiality is very important, security upgrades might not be feasible because of expensive and time-consuming certification processes.

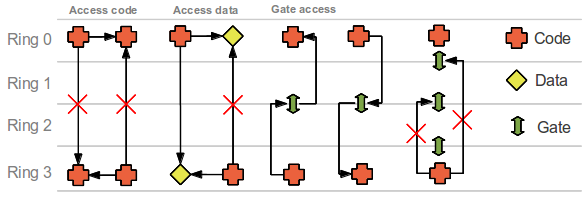

CPU multiplexing - Execution domains

In the early days, computers had operating systems that ran only one process at a time. To switch programs you first had to quit the current one. Other approaches that proved fragile were tried, such as the so-called "co-operative mode", where a process could choose to go to sleep and let another process continue. The obvious issue is what happens if a process won't let go or freezes. It's easy to blame the designers, but remember that such implementations were chosen because of limitations in hardware. Later, hardware support for interrupts enabled the OS to force back control after a certain time limit or on a defined event.

The main method of separation is layered rings of access. Some of the initial hardware implementations assigned 1 bit for this purpose, in other words 2 states. Modern processors have more: x86 has 4 rings, but only 2 of them (ring 0 and ring 3) are commonly in use. One particular, now historical, system called Multics had 8 rings in hardware. The rings have gates that processes must go through in order to access resources in a different ring. One major issue is the distribution of shared libraries across different rings and the overhead of the gatekeeping. Another important aspect is the time of check: how can the gate verify that arguments have not been changed since they were presented? This kind of separation is called execution domain separation, whereas separation between processes at the same security level is called process separation. It is tightly related to memory separation.
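The time-of-check problem can be made concrete with a small Python sketch. Everything here is a hypothetical stand-in: the "gate" is an ordinary function, the allowed prefix is made up, and the `before_use` callback simulates the window in which another thread could modify the caller-owned argument. The first gate checks the argument and then reads it again at the time of use; the second takes a private copy before checking, so check and use see the same value.

```python
ALLOWED_PREFIX = "/home/user/"  # hypothetical policy, for illustration only

def vulnerable_gate(request, before_use=lambda: None):
    """Checks the caller's argument, then reads it again later (check/use gap)."""
    if not request["path"].startswith(ALLOWED_PREFIX):   # time of check
        raise PermissionError("denied")
    before_use()  # stands in for the window where the caller can change the argument
    return "opened " + request["path"]                   # time of use

def safe_gate(request, before_use=lambda: None):
    """Copies the argument into gate-owned storage first, then checks and uses the copy."""
    path = str(request["path"])                          # private snapshot
    if not path.startswith(ALLOWED_PREFIX):
        raise PermissionError("denied")
    before_use()
    return "opened " + path

if __name__ == "__main__":
    req = {"path": "/home/user/notes.txt"}
    print(vulnerable_gate(req, lambda: req.update(path="/etc/shadow")))
    req = {"path": "/home/user/notes.txt"}
    print(safe_gate(req, lambda: req.update(path="/etc/shadow")))
```

Copying arguments across the boundary before validating them is one common way to close the gap, at the cost of extra overhead at the gate.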

MIT started Project MAC ("multiple access computer") in 1963, which led to Multics, where hardware and software were co-designed to support strong enforcement of execution domains. 64 rings were proposed but only 8 ended up being implemented in hardware (Honeywell 6180). The ring and gate model is derived from this project. It's interesting to note that x86 security has not changed much since 1985...

One interesting aspect of execution domain separation is the "Ctrl Alt Del" key combination known from the Windows login. When this combination is pressed, the CPU interrupts any activity and hands control to the operating system. In this mode, key input from I/O devices is not forwarded to user programs but kept in the kernel, allowing secure entry of the password. It is also noteworthy that Linux does not offer the same mechanism for login by default. Interrupts can be raised by hardware or by software (traps), and naturally a hardware interrupt is more trustworthy.

Inter-Process Communication (IPC) is used to enable communication between otherwise separated processes. One natural way of communicating between processes without any protection would be shared memory locations. Other methods include message passing and shared files.
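As a minimal sketch of message-based IPC, the following Python example starts a second process and exchanges data with it over pipes connected to its standard input and output. The helper name `ask_child` and the child's one-line program are illustrative assumptions. The two processes have separate address spaces, so the only data that crosses the boundary is what is explicitly written to the pipe.

```python
import subprocess
import sys

# Program run by the child process: read a message from stdin, reply on stdout.
CHILD_CODE = "import sys; sys.stdout.write(sys.stdin.read().upper())"

def ask_child(message: str) -> str:
    """Send a message to a separate process through a pipe and return its reply."""
    result = subprocess.run(
        [sys.executable, "-c", CHILD_CODE],  # a second Python interpreter process
        input=message,                       # written to the child's stdin pipe
        capture_output=True,                 # child's stdout is read back via a pipe
        text=True,
        check=True,
    )
    return result.stdout

if __name__ == "__main__":
    print(ask_child("hello from the parent"))
```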

Another consequence of multiplexing the decision-making part of the computer, with all the housekeeping this involves, is the difficulty of proving any real-time performance. Real time simply means that a task is guaranteed to complete within a certain time span. The length of the period is not that important in itself; it just has to be short enough for the task at hand. Standard Windows and Linux are not real-time systems.

The process of changing the running process is called a context switch.
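The "co-operative mode" described earlier in this section can be sketched with Python generators, where each `yield` is the point at which a task voluntarily hands the CPU back and the scheduler switches to the next task. This is a toy model under stated assumptions: the names `task` and `run_cooperative` are made up, and a real context switch also saves and restores registers and memory mappings.

```python
from collections import deque

def task(name, steps, log):
    """A toy task that does one unit of work, then voluntarily yields the CPU."""
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # co-operative hand-over; a task that never yields blocks everyone

def run_cooperative(tasks):
    """Round-robin scheduler; returns how many times a task handed control back."""
    ready = deque(tasks)
    switches = 0
    while ready:
        current = ready.popleft()
        try:
            next(current)          # resume the task until its next yield
        except StopIteration:
            continue               # task finished, drop it from the ready queue
        ready.append(current)      # task yielded, so put it back in line
        switches += 1
    return switches

if __name__ == "__main__":
    log = []
    print(run_cooperative([task("A", 2, log), task("B", 2, log)]), log)
```

Nothing in this scheduler can take control away from a task that loops without yielding, which is exactly the weakness that hardware interrupts were introduced to address.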

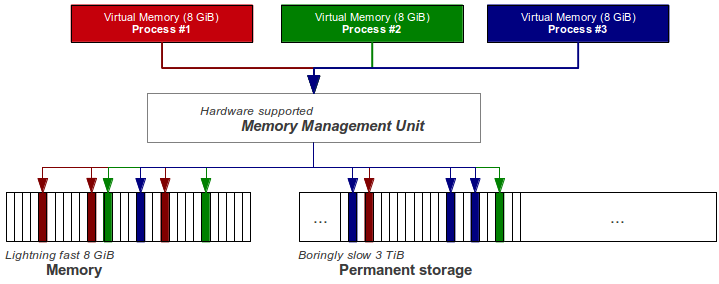

Memory multiplexing - Protection

Memory (RAM) was initially quite expensive, but was and still is essential in order to deliver procedures and data to an otherwise bored processor. The first method of multiplexing was dividing memory into blocks and assigning each process a chunk. It was discovered that access to memory followed certain patterns (linear runs and loops) and did not usually jump around randomly, so-called "locality of reference". Thus at any given time the processor does not need all the memory the program contains or would ask for in a worst-case scenario. Historically, whole processes were moved back and forth to disk to free up space, called swapping, but the current solution is to divide memory into small chunks called pages. Each process gets its own address space (virtual memory), and the OS, in tight cooperation with the hardware, makes sure these pages are allocated on demand transparently, and even moved to permanent storage when no longer in active use to free up physical memory. When a page is requested without being present, we have a page fault. Modern hardware architectures have some additional protection mechanisms, like an execute bit that can be set on or off per page, and a privilege bit used to enforce the execution domains. Sadly, we still have some "backwards compatibility" issues in the form of legacy execution modes (x86 real mode being one example): the CPU can be asked to change to such a mode, enabling bypass of security features.
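The effect of locality of reference on page faults can be sketched with a small simulation. The assumptions are mine, not the lecture's: a 4096-byte page size, a least-recently-used (LRU) replacement policy, and the function name `count_page_faults`. The sketch only counts faults; it does not model the actual copying of pages to and from disk.

```python
from collections import OrderedDict

def count_page_faults(accesses, n_frames, page_size=4096):
    """Count page faults for a sequence of virtual addresses, using LRU eviction."""
    frames = OrderedDict()  # resident pages, least recently used first
    faults = 0
    for address in accesses:
        page = address // page_size      # which page the address belongs to
        if page in frames:
            frames.move_to_end(page)     # page is resident: mark as recently used
        else:
            faults += 1                  # page fault: page must be brought in
            if len(frames) >= n_frames:
                frames.popitem(last=False)  # evict the least recently used page
            frames[page] = True
    return faults

if __name__ == "__main__":
    tight_loop = list(range(0, 8192, 64)) * 10           # stays within 2 pages
    scattered = [p * 4096 for p in range(8)] * 10        # cycles through 8 pages
    print(count_page_faults(tight_loop, n_frames=2))
    print(count_page_faults(scattered, n_frames=2))
```

With two physical frames, the tight loop faults only when each of its two pages is first touched, while the scattered pattern faults on every access.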

I/O devices - Controls

There are two classes of I/O devices: block devices and character devices.

A block device is defined as a device from which the processor can request an arbitrary block. For example a hard drive.

A character device delivers and accepts a stream of data, like a keyboard. It has no memory.

Many devices fall in between these two, like network links, which are thought of as character devices but have more logic associated with them, like routing and the possibility to ask for re-transmission. A tendency in modern computing is that devices are getting "smarter", containing more logic circuits and their own memory. In a state-of-the-art computer, it's not unusual for the graphics card (GPU) to have more "raw" power than the central processing unit (CPU). The CPU is of course more flexible, having more advanced instruction sets, but still... The devices are getting "semi-autonomous", offloading work from the CPU.

One important category of I/O is networking. It consists of many layers known from the OSI model: physical, data link, network and transport; in the current TCP/IP stack we then go directly to the application layer. Security can be implemented on each of these layers, and often it must be implemented on several of them. Many checksum and encryption tasks are now performed on the network device instead of directly on the central processing unit.

Another important device is permanent storage, typically a hard drive based on magnetic platters or solid-state flash memory. Although the technology differs, what user applications see is usually a hierarchical file system based on files. Spinning hard disks used to be fairly dumb, being controlled almost entirely by the CPU in the old days. Nowadays much of the low-level functionality is handled by integrated logic that only simulates the old interface. This has consequences: when you ask the drive to overwrite an area of the disk specified by an address, you are no longer guaranteed that the drive will actually perform the intended operation on the hardware. SSDs (solid-state drives), for example, redirect writes to avoid unnecessary wear and tear. It's also important to realize the distinction between deleting and erasing data.
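The distinction between deleting and erasing can be sketched in Python. The function names are illustrative assumptions. Deleting only removes the file system's reference to the data, while erasing attempts to overwrite the data itself first. As described above, the overwrite is only a request: on SSDs, and on journaling or copy-on-write file systems, the new bytes may be written somewhere else and the old data may survive on the physical medium.

```python
import os
import tempfile

def delete_file(path):
    """Delete: remove the directory entry; the old data blocks are left as they are."""
    os.remove(path)

def overwrite_in_place(path):
    """Erase attempt: overwrite the file's contents with zeros and flush to the device.

    Assumption: the storage stack honours in-place writes. Smart drives and some
    file systems may redirect the write, leaving the old data physically present.
    """
    size = os.path.getsize(path)
    with open(path, "r+b") as f:
        f.write(b"\x00" * size)
        f.flush()
        os.fsync(f.fileno())  # ask the OS to push the data to the device

if __name__ == "__main__":
    fd, path = tempfile.mkstemp()
    os.close(fd)
    with open(path, "wb") as f:
        f.write(b"secret")
    overwrite_in_place(path)  # best-effort erase of the contents
    delete_file(path)         # then remove the file system entry
    print(os.path.exists(path))
```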

Direct Memory Access (DMA) is an important technology with security implications. Since devices are getting smarter and the CPU is not very good at I/O operations, this mode lets the CPU simply ask the device to copy data to a specified location in RAM. The problem is that complete trust in the device is assumed, and no measure is implemented to prevent misbehavior such as copying to a different location or reading arbitrary memory. This is related to the fact that any device on an internal bus like USB or FireWire has full access to internal resources.

Covert channel attacks will be one of the topics of IMT 4541.

Graphical User Interface

The GUI of a modern operating system is particularly vulnerable, since it makes up a huge part of the code base while at the same time being under huge pressure to change. Users usually do not accept that a new version of an operating system looks like the old one. They want new functionality and prettier designs.

Design principles

Saltzer and Schroeder (1973)

- Economy of mechanism: "Keep it simple, stupid" KISS. Don't implement more complexity than absolutely necessary.

- Fail-safe defaults: Exception handling (invalid input) and "default deny" for permissions. When it comes to exception handling, a commonly used method called fuzzing "brute forces" different combinations of input to check whether a function does something strange because of inadequate input validation.

- Complete mediation: Any access to objects (by any subject) must be checked. There are circumstances where identification cannot be afforded, like in control centers where quick reaction is critical, but otherwise every access should be checked.

- Open design: Avoid security by obscurity, related to Kerckhoffs's principle: "Only the key in a cryptographic system should need to be secure"

- Separation of privilege: Avoid "all or nothing". Like separating access to logs, security settings, etc. No single point of failure.

- Least privilege: A subject (user, process..) does not need access to more functionality than necessary to perform its function.

- Least common mechanism: Mechanisms used for authentication should not be shared. One example could be the difference between uniquely salted and identically salted password hashes in a database. If the salt is the same, the same mechanism is used. If different salts are used, we move towards the goal of this principle (bcrypt might be a more secure alternative).

- Psychological acceptability: The human effect. If security gets too complicated or time consuming, we will find a workaround, reducing the security a great deal.
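The salting example given under "least common mechanism" can be sketched as follows. Note the substitution: this uses PBKDF2 from Python's standard library rather than the bcrypt mentioned in the list, simply because it needs no third-party package. The function names and the iteration count are illustrative assumptions, not recommendations from the lecture.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # illustrative work factor, chosen for the example only

def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a password hash with PBKDF2-HMAC-SHA256 and the given salt."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)

def new_record(password: str):
    """Create a (salt, hash) record with a fresh random salt for this user only."""
    salt = os.urandom(16)
    return salt, hash_password(password, salt)

def verify(password: str, salt: bytes, stored: bytes) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    return hmac.compare_digest(hash_password(password, salt), stored)

if __name__ == "__main__":
    shared_salt = b"same-for-everyone"
    # Shared salt: two users with the same password get identical hashes.
    print(hash_password("hunter2", shared_salt) == hash_password("hunter2", shared_salt))
    # Unique salts: the same password yields different hashes per user.
    (salt_a, hash_a), (salt_b, hash_b) = new_record("hunter2"), new_record("hunter2")
    print(hash_a == hash_b, verify("hunter2", salt_a, hash_a))
```

With a shared salt, one precomputed table serves an attacker for every account; with unique salts, the work has to be repeated per user.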

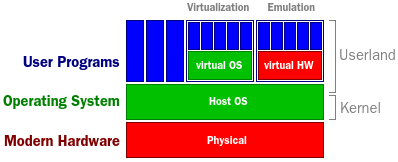

Virtualization and emulation

Virtualization is a technique used for different purposes. Virtuality is kind of difficult to explain, but it has to do with something pretending to be what it's not. You could create a disk drive in memory and pretend it's a normal disk as long as the power is on. As soon as the power is lost, the underlying presumptions break and the disk is lost. Another example is a virtual CD/DVD drive, which simulates an inserted physical medium from a file. Virtualization is often used to separate operating systems from hardware in order to be able to move them around on the fly depending on the current load, and at the same time to run many servers/operating systems on a single piece of hardware to utilize all available resources (load balancing). It is also often used for security, by the argument that malware would be trapped inside the virtual operating system and not be able to mess with the host. A problem with this assumption is that current operating systems only use 2 of the 4 hardware rings for protection. There simply isn't any available hardware enforcement to ensure this kind of protection. The process separation in the virtual machine would have to be based on software.

A third use mentioned is running old legacy software on modern hardware. This is normally termed emulation, and the idea is to translate the operations required by the legacy programs in software to simulate the same behavior. This process is costly in terms of performance, and the pitfall is the same as with virtualization: assumptions about hardware backing are no longer valid. Typical examples are banking software written decades ago that is so complex or unreadable to current programmers that it's more cost-efficient to build wrappers around wrappers to keep it running. Other examples often seen are emulators for old gaming consoles like Nintendo and Sega. Another term mentioned is binary translation: code is analyzed and changed in advance or on the fly, substituting privileged code with "safe" code. Problematic areas can be synchronization of the program counter, complex states that can be difficult to maintain, and handling of self-modifying (polymorphic) code.

Virtualization made a huge improvement during 2005/2006, when CPU vendors added virtualization support to their chips. The original idea was to do the housekeeping in software, making sure an individual virtual host did not do anything that could harm the other hosts. This cost a lot of extra CPU cycles. Now, with hardware support, the virtual machine manager can pass most commands directly to the CPU, knowing the hardware will trap (exception/fault) if something illegal happens.
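The idea of passing commands straight to the CPU and relying on a trap for illegal ones can be illustrated with a toy model. Everything in this sketch is hypothetical: the instruction names, the `PRIVILEGED` set and the "CPU" are invented for illustration and do not correspond to any real instruction set or hypervisor API.

```python
class Trap(Exception):
    """Raised by the toy CPU when guest code attempts a privileged instruction."""

PRIVILEGED = {"HLT", "OUT"}  # hypothetical privileged operations

def cpu_execute(instruction, registers):
    """Toy CPU: runs harmless instructions directly, traps on privileged ones."""
    op, *args = instruction
    if op in PRIVILEGED:
        raise Trap(op)                   # the hardware refuses and notifies the manager
    if op == "MOV":
        registers[args[0]] = args[1]
    elif op == "ADD":
        registers[args[0]] += args[1]
    else:
        raise ValueError(f"unknown instruction: {op}")

def run_guest(program):
    """Toy virtual machine manager: pass everything to the CPU, handle traps only."""
    registers = {"r0": 0}
    trapped = []
    for instruction in program:
        try:
            cpu_execute(instruction, registers)  # no software pre-check needed
        except Trap as trap:
            trapped.append(str(trap))            # manager would emulate or deny here
    return registers, trapped

if __name__ == "__main__":
    program = [("MOV", "r0", 1), ("ADD", "r0", 2), ("OUT", 0x80), ("ADD", "r0", 3)]
    print(run_guest(program))
```

The manager does no per-instruction inspection in the common case; it only does work when the hardware traps, which is where the performance gain over pure software housekeeping comes from.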