Crossfire nightmare

15 Oct. 2009. Tags: Computer

Update:

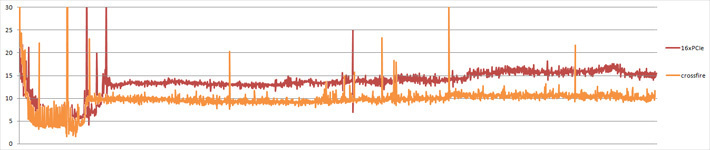

After installing the new motherboard, an X48 with dual 16x PCIe 2.0 slots, I finally got a presentable result.

Frame time is down from about 15 ms to 10 ms, and the variance is gone. This is still the first seconds of the "The Bog" intro (Call of Duty 4), with everything on high at 720p.

It all started back when I bought an extra ATI Radeon 4850 graphics card (GPU). One card only supports two displays at the same time, so adding one more would allow me to connect up to four. My motherboard, a P35-DS4, had CrossFire support (it said so on the box), so it would be interesting to test it. After plugging the card in, enabling CrossFire in the driver and installing some of my newer games all over again (I had just installed Windows 7), I was ready to blast off.

But no. The frame rate wasn't bad, but it was worse than with a single card, and it felt way lower than the counter said. Why would two cards do worse than one?

The first thing to do when something isn't right is to google it and see if somebody else has the same problem. No obvious results. I did notice a review comparing performance at different PCI Express (PCIe) bus speeds. It was clear that performance was lower on a board supporting only 4x compared to the full 16x/32x slots, but none of the results were below 100% of a single card.

My motherboard, to my surprise, had only 4x PCIe speed on the secondary slot. Until now I thought that the physical length of the slot decided what speed was used. My board also has this second slot connected to the southbridge, making bandwidth and latency even worse.

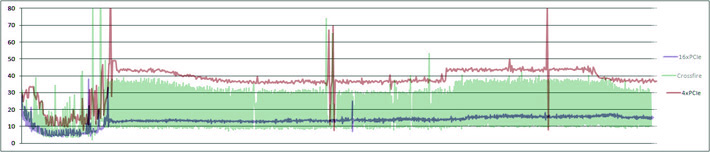

After a lot of testing and reading, I noticed something strange about the spacing between frames. It was pure coincidence that I stumbled upon this: I had opened the frame log from Fraps in an Excel spreadsheet and was trying to plot different aspects of the data. Look at this graph:

Lower is better: a short time between frames means a higher frame rate. The blue line is the card in the 16x slot. The red line is the card in the 4x slot. When the two were combined in CrossFire, I got the thick green line. On closer inspection, you can see it jumping up and down like crazy.
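The spreadsheet step can be sketched in a few lines of Python. This is only an illustration: it assumes the log has already been reduced to a list of cumulative timestamps in milliseconds, one per frame, and the function names and the sample numbers are made up rather than taken from my measurements.

```python
from statistics import mean, pstdev


def frame_intervals(timestamps_ms):
    """Return the time between consecutive frames, in milliseconds."""
    return [later - earlier
            for earlier, later in zip(timestamps_ms, timestamps_ms[1:])]


def summarize(intervals_ms):
    """Average frame rate plus the spread that an FPS counter hides."""
    avg = mean(intervals_ms)
    return {
        "avg_fps": 1000.0 / avg,
        "avg_ms": avg,
        "worst_ms": max(intervals_ms),
        "stdev_ms": pstdev(intervals_ms),
    }


# Hypothetical timestamps (not measured data): an even run and a jumpy run.
even = [0, 15, 30, 45, 60]
uneven = [0, 5, 30, 35, 60]

for name, stamps in (("even", even), ("uneven", uneven)):
    print(name, summarize(frame_intervals(stamps)))
```

Both runs report the same average frame rate, but only the spread between frames shows that the second one jumps up and down.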

The problem was already solved, and a new motherboard already ordered. But what is actually going on? Originally, the Central Processing Unit (CPU) rendered everything and wrote it to the frame buffer on the graphics card. CPUs weren't that fast, and it has for a long time been cheaper to offload the CPU with specialized hardware, called a Graphics Processing Unit (GPU), than to make the CPU faster. The load balance between the two has changed over time.

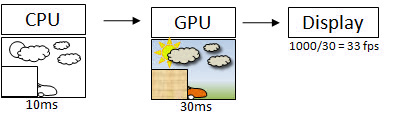

The CPU usually calculates the physics, does all the game logic and sends the frame to the GPU as "vectors". The GPU then "colors" it and adds effects like lighting, shadows and fog.

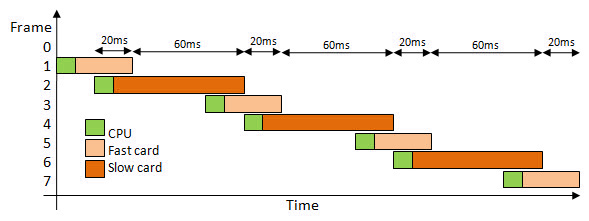

This simple drawing (made in PowerPoint) illustrates the process. When it comes to improving performance, it's important to look at the weakest link. If the GPU is overloaded, all the CPU power in the world won't make a difference. In our example, the CPU spends 10 ms on each frame. The GPU in turn needs 30 ms.
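The weakest-link idea fits in a tiny Python sketch. It assumes an idealized pipeline in which the CPU and the GPU overlap perfectly, so the slower stage alone sets the pace. The function names are mine, for illustration only.

```python
def frame_time_ms(cpu_ms, gpu_ms):
    """In an ideal pipeline the slowest stage decides the time per frame."""
    return max(cpu_ms, gpu_ms)


def fps(frame_ms):
    """Convert a time per frame in milliseconds to frames per second."""
    return 1000.0 / frame_ms


# The example from the drawing: 10 ms of CPU work, 30 ms of GPU work.
print(round(fps(frame_time_ms(10, 30)), 1))  # 33.3

# A CPU twice as fast changes nothing while the GPU is the bottleneck.
print(round(fps(frame_time_ms(5, 30)), 1))   # 33.3
```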

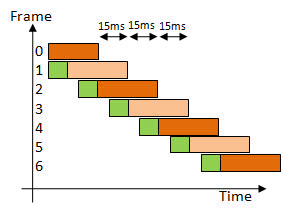

When adding a second card, the CPU can send every odd frame to the first GPU and every even frame to the second. This is the way CrossFire is usually configured, and it is called Alternate Frame Rendering (AFR). In theory, we could double the frame rate as long as the CPU can keep up:

The CPU time is shown in green. Divide 1000 by the frame time in milliseconds and you get the frame rate in frames per second. Since the CPU and the GPU form a pipeline, they can work on different tasks at the same time.

With AFR there is (logically) a limitation. You would not send frame #3 before #2 is complete. This is what would happen if the second card is somehow slowed down. In my situation the motherboard is slowing down the second PCIe slot.
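As a rough check of the "in theory" part, here is a small Python sketch of the best case. It assumes the GPU work is spread perfectly evenly over the cards and nothing else gets in the way, which is exactly the assumption that fails in practice. The function name is hypothetical.

```python
def ideal_afr_fps(cpu_ms, gpu_ms, n_gpus):
    """Best-case AFR frame rate: the GPUs share the frames evenly,
    and the CPU becomes the limit once the GPUs are fast enough."""
    frame_ms = max(cpu_ms, gpu_ms / n_gpus)
    return 1000.0 / frame_ms


print(round(ideal_afr_fps(10, 30, 1), 1))  # 33.3, one card
print(round(ideal_afr_fps(10, 30, 2), 1))  # 66.7, two cards, doubled
print(round(ideal_afr_fps(10, 30, 4), 1))  # 100.0, now the CPU is the limit
```

With the numbers from the example, two cards double the frame rate, while four cards would only reach 100 FPS because the CPU cannot prepare a frame in less than 10 ms.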

The 16x test ran very smoothly. The 4x one was noticeably less smooth, but still playable. The CrossFire one, on the other hand, was really bad, even though its FPS was higher and its worst time between frames was lower. In Windows XP, you are allowed to prepare a few frames ahead of time at the cost of higher input lag. With one card you would normally not want to render ahead, since the GPU is not going to process the frames any faster anyway. With multiple GPUs, things change. Here you want to render alternate frames so that a free GPU is always being fed.

Input lag is basically the time from when you perform an action (pressing a key, moving the mouse etc.) until the response is shown on the display. You have delay in the input device, in the OS, processing time in the CPU and GPU, reading the frame buffer, and the time it takes the display to update the pixels. The computer can't predict what you will do in the future, so rendering ahead makes the lag worse because it has to be done with "old data".

Looking at the latest graph, you will see that the actual changes to the image only happen when the CPU is doing work (the green boxes). We get two rapid updates followed by a long break. The numbers in the figure are rounded and scaled to make it easier to read. Looking back at the first graph, we see that the result is still better than for the single 4x speed setup. This "stutter" or extra frame is often called microstutter since it happens so fast. It could possibly, just by interrupting the flow, be the reason for the perceived worse gameplay...
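The "two rapid updates followed by a long break" pattern can be reproduced with a toy timeline in Python. This is a sketch under my own simplifying assumptions, not a reconstruction of the figure or of what the driver really does: frames alternate between the GPUs, the CPU only starts a frame once the target GPU is free, and frames are shown in order. All names and timings are illustrative.

```python
def simulate_afr(cpu_ms, gpu_ms, n_frames):
    """Toy AFR timeline. gpu_ms holds one render time per GPU.

    Returns one (cpu_start, shown) pair per frame, in milliseconds.
    """
    cpu_free = 0.0                   # when the CPU can start the next frame
    gpu_free = [0.0] * len(gpu_ms)   # when each GPU finishes its current frame
    last_shown = 0.0                 # frames are displayed in order
    timeline = []
    for frame in range(n_frames):
        g = frame % len(gpu_ms)      # alternate frames between the GPUs
        # Assumption: the CPU waits until the target GPU is idle.
        cpu_start = max(cpu_free, gpu_free[g])
        cpu_free = cpu_start + cpu_ms
        gpu_free[g] = cpu_free + gpu_ms[g]
        last_shown = max(last_shown, gpu_free[g])
        timeline.append((cpu_start, last_shown))
    return timeline


def gaps(times):
    """Differences between consecutive entries."""
    return [b - a for a, b in zip(times, times[1:])]


timeline = simulate_afr(cpu_ms=10, gpu_ms=[30, 30], n_frames=6)
print(gaps([start for start, _ in timeline]))  # [10.0, 30.0, 10.0, 30.0, 10.0]
print(gaps([shown for _, shown in timeline]))  # [10.0, 30.0, 10.0, 30.0, 10.0]
```

Averaged over a full pair of frames, the gap is 20 ms, so a counter would report 50 FPS, but the frames actually arrive in pairs 10 ms apart with a 30 ms pause in between. Passing a slower second card, for example `gpu_ms=[30, 40]`, lets you experiment with the uneven case.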